[Photo: Lea Suzuki/The San Francisco Chronicle via Getty Images]

A Systems Engineering Reflection on Ethics, Risk, and Speaking Uncomfortable Truths

Scott Adams, the creator of the satirical comic strip Dilbert, died on Tuesday, January 13, 2026, at the age of 68 [1]. His death marks the end of a career that left a lasting imprint on how engineers and technologists understand organizational dysfunction. For much of my professional career, Adams occupied a unique and, frankly, useful place in the engineering world. Through Dilbert, he became a satirist of corporate dysfunction, an informal organizational theorist, and a cultural translator between engineers and executives. His work gave voice to frustrations that many technical professionals experienced daily but struggled to articulate within hierarchical organizations [2]. Most of us have encountered some version of the clueless “pointy-haired” boss or the disengaged “Wally” at some point in our careers. It is hard to count how many Dilbert cartoons were clipped and pinned to cubicle walls over the years. We laughed not because the situations were trivial, but because humor was often the only safe way to acknowledge how absurd they could be.

A Satirist Who Modeled Systems Thinking

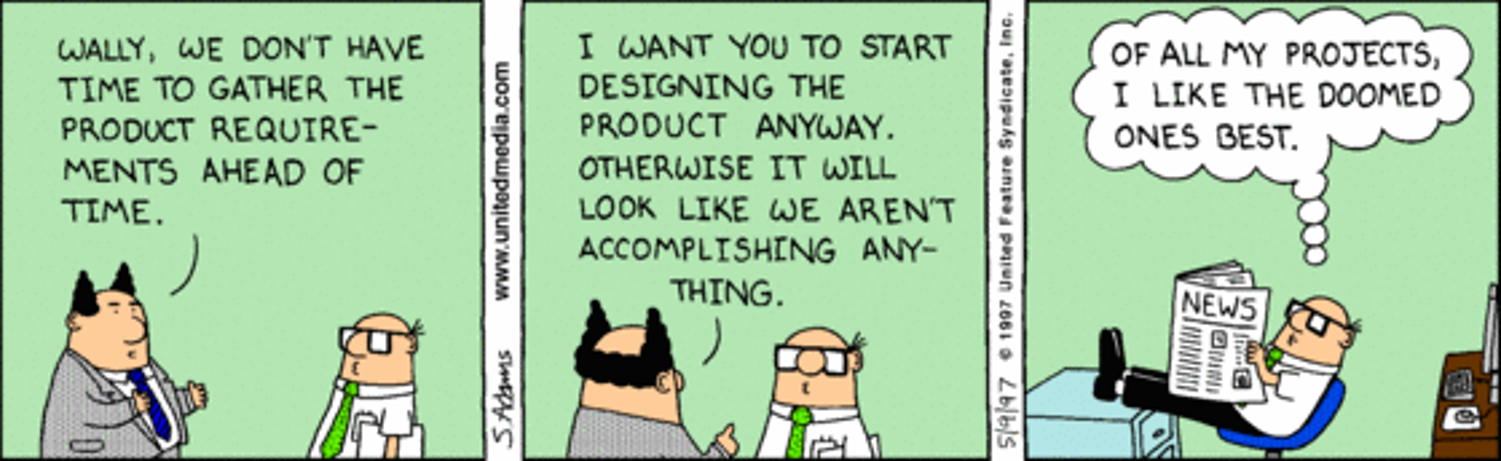

Dilbert was never merely about inept leadership, organizational pathologies, or dysfunctional team dynamics. At its core, the strip was a systems critique. Adams consistently highlighted misaligned incentives, broken feedback loops, and decision authority detached from technical reality. [3] Engineers recognized themselves in Dilbert not because the character was heroic, but because he was constrained by a system that behaved exactly as it was designed to.

In my own Systems Engineering training, I occasionally used Dilbert cartoons to surface uncomfortable but productive conversations. Even the August 2007 INCOSE SE Handbook v3.1 included a Dilbert cartoon as Figure 7‑1, illustrating how projects are doomed when design proceeds without an understanding of the requirements [4]. Great stuff.

A single strip could quickly expose issues around requirements ambiguity, ineffective escalation paths, or management incentives that unintentionally reward failure. The humor lowered defenses, but the lessons were serious. In that sense, Adams performed an educational function long before terms such as socio-technical systems and organizational risk became standard language within Systems Engineering.

Looking for Real Change

Beyond Dilbert, Adams’ books, including How to Fail at Almost Everything and Still Win Big, Reframe Your Brain: The User Interface for Happiness and Success, and Loserthink: How Untrained Brains Are Ruining America, focused heavily on persuasion, influence, and self-improvement [5]. These themes resonated with engineers who often discover that technical correctness alone is insufficient to move organizations. Adams argued that understanding how people are persuaded, how incentives operate, and how narratives shape decisions is essential to improving complex organizations, as well as surviving them.

While his tone was sometimes provocative, the underlying message aligned with an uncomfortable Systems Engineering truth: large systems rarely change based on data alone. They change when information is communicated in ways that decision-makers can absorb and act upon.

Challenging Assumptions within a Broken System

Over the past ten years, Adams increasingly turned his attention toward questioning dominant social and institutional narratives. He publicly predicted Donald Trump’s rise at a time when most commentators dismissed the possibility. [6] He was an outspoken critic of the COVID-19 pandemic response and the rapid adoption of ESG-driven corporate practices.

In his daily podcast, Real Coffee with Scott Adams, he criticized mask mandates, lockdowns, and vaccine mandates, framing his objections in terms of persuasion, incentives, and second-order effects rather than partisan ideology. He argued that pandemic-era policies relied more on authority than transparent risk communication and warned that coercive compliance would erode economic stability, social cohesion, and institutional trust [7].

In parallel, Adams increasingly used Dilbert to satirize ESG initiatives and corporate diversity programs, which he viewed as performative responses to social pressure rather than risk-informed management. That critique contributed to the removal of Dilbert from several major newspaper groups in 2022 and accelerated his migration away from mainstream platforms [8].

His controversial comments on a Rasmussen poll, which he described as extreme hyperbole that critics took out of context [9], led hundreds of newspapers and his distributor, Andrews McMeel Universal, to sever ties with him [10].

Whether one agrees with his conclusions or not, the Systems Engineering significance lies in the pattern itself: Adams repeatedly challenged assumptions that were widely treated as settled, emphasized incentives and downstream effects, and accepted personal and professional risk as the cost of sustained dissent.

Systems Engineering Ethics and the Obligation to Speak Out

The INCOSE Systems Engineering Handbook is clear that ethical practice requires recognizing hazards and risks and communicating concerns clearly and promptly, even when doing so is inconvenient or unpopular [11]. This obligation is not limited to technical subsystems. It applies equally to organizational, social, and policy-level systems when those systems have the potential to cause harm.

Both the Risk Management and Quality Management processes reinforce this expectation [12][13]. Risk must be identified, escalated, and addressed rather than suppressed. Quality processes depend on transparent reporting of nonconformities and deviations, not on punishing those who surface them. When challenges are silenced rather than examined, the Handbook would diagnose the outcome not as discipline but as a process failure.

From that lens, Adams’ willingness to question accepted narratives can be interpreted as aligned with the ethical expectations placed on Systems Engineers. Challenging dominant assumptions is often the first step in identifying systemic risk. It is also frequently the step that organizations resist the most.

The Cost of Speaking Out

Adams paid a visible price for his positions. He lost platforms, professional standing, and much of what he had built over decades. Systems Engineers should not be naive about this reality. The Handbook does not promise protection from consequences. What it does establish is that silence in the face of perceived risk is itself an ethical failure.

To me, Adams’ story serves as a reminder that courage in Systems Engineering is not limited to technical heroics. Sometimes it looks like raising an uncomfortable question, knowing full well that the system may respond defensively. Dilbert taught us to laugh at broken systems. Adams’ later career reminds us that broken systems often punish those who point them out.

As a Systems Engineer, are you prepared to question and challenge the very system in which you operate when you believe a risk or danger is being ignored?

Optional Reader Resource

This practical checklist is for Systems Engineers who sense that risk, quality, and ethics are drifting out of alignment but lack a tool to diagnose and articulate those concerns before the consequences become unavoidable.

References

[1] CBS News. “Scott Adams, creator of the Dilbert comic strip, dies at 68.” January 13, 2026. https://www.cbsnews.com/news/scott-adams-dies-age-68-dilbert-comic-strip/

[2] Bregel, Sarah. "‘Dilbert’ Taught White-Collar Workers How to Talk About Hating Work." Fast Company, 14 Jan. 2026, www.fastcompany.com/91475080/dilbert-taught-white-collar-workers-how-to-talk-about-hating-work. Accessed 20 Jan. 2026.

[3] Ennes, Meghan. "How 'Dilbert' Practically Wrote Itself." Harvard Business Review, 18 Oct. 2013, hbr.org/2013/10/how-dilbert-practically-wrote-itself. Accessed 20 Jan. 2026.

[4] Systems Engineering Handbook: A Guide for System Life Cycle Processes and Activities. Edited by Cecilia Haskins, version 3.1, International Council on Systems Engineering (INCOSE), 2007. INCOSE-TP-2003-002-03.1. p. 7.2 of 20.

[5] "Scott Adams: Books, Biography, Latest Update." Amazon, https://www.amazon.com/stores/Scott-Adams/author/B004N47QVK. Accessed 20 Jan. 2026.

[6] Weissmueller, Zach. "As Trump Coasts to the Nomination, Remember That the Cartoonist Behind Dilbert Saw It All Coming." Reason, 7 May 2016, https://reason.com/2016/05/07/as-trump-coasts-to-the-nomination-rememb/

[7] Adams, Scott. "Episode 1460 Scott Adams: I Admit I Was Wrong About the Pandemic." YouTube, uploaded by Real Coffee with Scott Adams, 6 Aug. 2021, www.youtube.com/watch?v=2-lxE8Jm8Cc.

[8] Quinson, Tim. "‘Dilbert’ Becomes the Voice of ESG Opposition." Bloomberg, 21 Sept. 2022, www.bloomberg.com/news/articles/2022-09-21/-dilbert-becomes-the-voice-of-the-esg-opponents-green-insight. Accessed 20 Jan. 2026.

[9] Ross, Janell. “The Death of Dilbert and False Claims of White Victimhood.” Time, 1 Mar. 2023, https://time.com/6259311/dilbert-racism-scott-adams/. Accessed 20 Jan. 2026.

[10] Helmore, Edward. "Dilbert cartoon dropped by US newspapers over creator’s racist comments." The Guardian, 26 Feb. 2023, www.theguardian.com/us-news/2023/feb/26/dilbert-cartoon-dropped-by-us-newspapers-over-creators-racist-comments. Accessed 20 Jan. 2026.

[11] INCOSE Systems Engineering Handbook: A Guide for System Life Cycle Processes and Activities. Edited by David D. Walden et al., 5th ed., Wiley, 2023. International Council on Systems Engineering. Section 5.1.4 Ethics.

[12] Ibid., 2.3.4.4 Risk Management Process.

[13] Ibid., 2.3.3.5 Quality Management Process.